Edge Computing Migration

The latest advances in, and the diffusion of, a wide range of increasingly sophisticated smart devices capable of differentiated forms of wireless connectivity have led to a pervasive presence of mobile devices in the everyday world. These devices are used in a wide range of contexts, from private mobile use to Smart City scenarios, where applications focus on user engagement to solve tasks distributed throughout the environment.

As widely recognized in the related literature, mobile devices will grow and consolidate their position if and only if infrastructure and applications can benefit from standard solutions and virtualization, such as cloud computing, which allows anyone to set up a virtual cluster with large-scale computational resources instead of an expensive dedicated server. Cloud computing is an ideal technology to overcome typical limitations of mobile devices in terms of: i) costs, to maintain, use, and upgrade the system; ii) computing resources, needed to manage and store large amounts of data, with the possibility to perform complex and long-term analysis; iii) scalability, avoiding traditional approaches to server provisioning, e.g., worst-case capacity planning, which lead to over-engineered platforms that meet quality of service requirements during peak load but leave a significant amount of computing resources idle under non-peak load.

In order to develop adaptive and autonomous systems, as well as infrastructure able to support pervasive use of mobile devices, including under heavy workloads, a two-layer model based only on the cloud and mobile devices may be too simplistic and inefficient. Cloud computing has not been designed to continuously collect and process large amounts of highly dynamic and heterogeneous data on the fly. Letting devices communicate directly and independently with the cloud might lead to several issues: redundant requests and information traffic, extremely high energy consumption, high latency and poor location awareness due to the distance between the cloud and mobile devices, mobility, security and privacy, etc. In addition, many applications that operate in hostile environments execute computation-intensive tasks under strict requirements, which further exacerbates these issues. Hostile environments are characterized by very high uncertainty of the available resources, such as computational capability, by limited or inconsistent bandwidth and unreliable networks, and by the need for rapid deployment. Typical communication protocols and mechanisms were conceived for the well-conditioned, low-uncertainty environments that most Internet users experience today, rather than for hostile environments that require worst-case assumptions. In other words, although mobile devices must offload computation to significantly more powerful platforms, in hostile environments they cannot rely solely on cloud computing, both because the cloud is unsuitable for strict requirements, e.g., quick actuation in case of anomalies, disconnection management and recovery, or high mobility, and because uncertain network infrastructures can cause unexpected disconnections.

In this scenario, as is starting to be recognized in the related literature, we believe it is necessary to add an intermediate layer between mobile devices and the cloud, able to support all device requirements and to complement the cloud close to the devices. A Mobile Edge Computing (MEC) layer brings computation resources near the edge of the network in order to meet mobile device requirements while overcoming many cloud limitations, e.g., latency, mobility support, location awareness, and scalability, and to work properly also in hostile environments, overcoming typical issues such as device recovery, continuity of service, low bandwidth, and unexpected scenarios.

In this paper, we present an original solution to overcome the challenges of resource-limited mobile devices in hostile environments by using a Mobile Edge Computing (MEC) intermediate middleware layer. Our primary focus is to design and implement an efficient MEC layer that helps devices preserve their full functionality and supports service provisioning to final users, also in case of high mobility in hostile environments. Specifically, we have designed and implemented a support platform where highly demanding computation tasks on mobile devices can be delegated to the MEC layer, which executes the tasks and returns the related results while preserving service continuity, also in case of end users' mobility, thanks to proper migration of virtualized functions between MEC nodes. In addition, we complement typical reactive migration with simple predictive handoff mechanisms that move a specific virtualized function to the most suitable MEC node, based on multiple aspects such as network statistics, availability, and recoverability. In particular, we propose a dynamic proactive handoff scheme with motion prediction, which moves functions towards the next forecasted MEC node without waiting for user requests in the new MEC locality, in order to prepare the provisioning of composed services in advance and to drastically reduce the unavailability time due to virtualized function migration. To the best of our knowledge, this is the first implemented MEC platform, available for public download, that extends the widely accepted Elijah platform with hot migration of edge functions via proactive handoff mechanisms. We believe that our proposal is strongly original from several perspectives:

- design and implementation of an autonomous and transparent mechanism for highly mobile devices that ensures service continuity without impacting device workload, through proactive Virtual Machine (VM) and container synthesis, as well as proactive handoff triggers, even between heterogeneous MEC nodes;

- accurate proactive handoff based on simple but effective forecasting of users' movements, to move and prepare a virtualized function at the new serving MEC node before a mobile service needs it, as well as reactive behavior and functionality migration in case of unexpected user/device mobility;

- use of a three-layer architecture to execute resource-intensive mobile services also in hostile environments with the support of the MEC layer, in a quality-aware and quality-oriented way, while at the same time providing cloud features, e.g., for longer-term analysis of collected sensing data;

- implementation based on promising, powerful, and open-source emerging platforms and tools, i.e., Elijah (for Openstack features to be used at MEC nodes), OpenCV, and LibSVM.

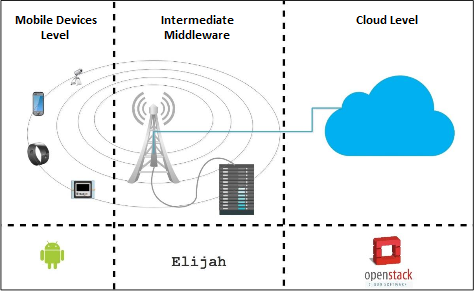

We now present the architecture we designed and built, describing each layer and how the layers interact. Our solution guarantees the ability to perform highly resource-demanding computation on mobile devices with the support of the MEC middleware layer, by providing synthesis and handoff mechanisms for continuous service provisioning. As indicated previously, we adopt a three-layer architecture composed of mobile devices, MEC nodes, and the cloud, as illustrated in Figure 1 and explained in the following.

Mobile Device Layer

Our mobile device layer consists of all the endpoints that need to perform resource-intensive executions of mobile services but do not have enough capabilities to do so. For instance, this includes heavy image and video analysis/processing that can only be performed with computationally intensive techniques requiring resources beyond the capability of mobile devices, at least when application-specific response-time requirements are taken into account. Our solution enables a very wide range of mobile devices to perform intensive computation tasks, independently of the specific device, by completely decoupling the interaction between mobile devices and the task execution that takes place on the MEC layer; the only current constraint is that devices run Android OS, and the solution can easily be extended to any other mobile OS in the future. In the running example used in the following sections, we support a face recognition application for security purposes, able to monitor an environment with cameras that capture photos and videos of the surrounding area. For such a service to be practically useful in real-world scenarios, the processing time for any sensed media must be limited to a few seconds, so that alarms can be raised promptly in case of suspicious activity. In a context like this, mobile devices often do not have enough capabilities to satisfy strict response-time requirements, in particular considering their possible immersion in hostile environments; therefore, they must delegate most analysis functions to the MEC middleware layer.

MEC Middleware Layer

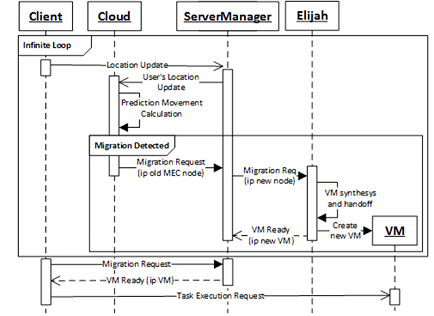

Our MEC middleware layer consists of two primary components: i) the standard Elijah platform, currently one of the most promising and complete open-source projects for realizing the middleware layer, which moves computational resources near the edge of the network by providing and orchestrating VMs near mobile devices; and ii) our original platform extension module, called ServerManager, which completely decouples mobile devices and Elijah, linking them by forwarding user requests to the Elijah platform and vice versa, thus intercepting and coordinating all interactions between mobile devices and MEC nodes. MEC nodes can be provisioned either proactively or reactively. Proactive provisioning minimizes and automates virtualized function migration by pre-loading the needed functions on the target MEC node that will presumably be the next one visited by the served user; this is done before receiving explicit migration requests, thus limiting the costs in terms of unavailability and performance during mobile device handoffs and the associated VM/container synthesis. The global cloud (the third layer, described below) triggers proactive virtualized function migration by interacting with the ServerManager, because predictions are typically performed in the cloud when connectivity is available. In contrast, reactive migration is triggered when a mobile device explicitly requests to move a virtualized function; this is the only possible solution when connectivity to the cloud is unavailable. Once migration is triggered, the ServerManager checks whether the required virtualized function can be executed on the MEC node and forwards to Elijah the request to create a VM/container that executes the task. Finally, the ServerManager returns to the mobile device the reference for invoking the specified VM/container, which is then used for direct VM/container-to-device communication.
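The proactive/reactive flow described above can be summarized with a small Python sketch. It is only a model under stated assumptions: the class ServerManagerSketch, the create_vm callback standing in for the request forwarded to Elijah, and the fixed number of free VM slots are hypothetical and do not reproduce the ServerManager code or any Elijah API.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, Optional, Set, Tuple

Endpoint = Tuple[str, int]  # (IP address, port) of a VM/container


class Trigger(Enum):
    PROACTIVE = "cloud"   # predicted migration, requested by the global cloud
    REACTIVE = "device"   # explicit migration request from a mobile device


@dataclass
class ServerManagerSketch:
    """Hypothetical model of the ServerManager flow, not the actual implementation."""
    supported_services: Set[str]
    free_slots: int                         # VMs this MEC node can still host
    create_vm: Callable[[str], Endpoint]    # stands in for the request to Elijah
    ready: Dict[str, Endpoint] = field(default_factory=dict)

    def migrate(self, service: str, trigger: Trigger) -> Optional[Endpoint]:
        endpoint = self.ready.get(service)
        if endpoint is None:
            # Check that this MEC node can execute the virtualized function.
            if service not in self.supported_services or self.free_slots == 0:
                raise RuntimeError(f"{service} cannot be hosted on this MEC node")
            endpoint = self.create_vm(service)
            self.free_slots -= 1
            self.ready[service] = endpoint
        # A cloud-triggered request only pre-loads the VM; a device-triggered one
        # returns the reference used for direct VM-to-device communication.
        return endpoint if trigger is Trigger.REACTIVE else None
```

A proactive call made by the cloud leaves a ready VM behind, so the later reactive request from the device is answered without creating a second VM.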

Cloud Layer

The cloud layer assists the MEC intermediate middleware by providing proactive analysis of users' movements and by supplying the base VM images and overlays used by the services, acting as a backup repository where newly created base images and new/updated overlay files are stored. Our cloud layer keeps seldom-updated snapshots of the complete status of the overall deployment environment and receives periodic updates from MEC nodes about users' movements; it stores the location history of all users and performs computation-intensive predictions on the cloud-stored location data. Our location prediction is probabilistic (over neighboring MEC nodes): if the predicted probability that a user changes its current MEC node is higher than a configurable threshold, the cloud layer sends a request to the ServerManager on the new target MEC node to start the migration in a timely manner. In addition, the cloud layer is used during the initial service setup operations and in case of anomalies, to send missing files (see below) to the involved MEC nodes, thus acting as a global repository for MEC nodes. In particular, our cloud layer stores, with periodic snapshots, all the base VM/container images used for node setup and all the overlays specifically created by MEC nodes for any supported mobile service. In our architecture, the cloud layer is responsible for reacting to anomalies or errors and recovering the affected MEC nodes; the optimizations we include to reduce MEC node downtime in these cases are out of the scope of this paper and are not described here.
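The threshold-based trigger can be sketched as follows, assuming the prediction step already yields a probability distribution over the current MEC node and its neighbors. The function name, the dictionary format, and the send_request callback (standing in for the request sent to each target ServerManager) are hypothetical.

```python
from typing import Callable, Dict, List


def trigger_proactive_migrations(
    user_id: str,
    current_node: str,
    probabilities: Dict[str, float],
    threshold: float,
    send_request: Callable[[str, str], None],
) -> List[str]:
    """Ask each likely next MEC node's ServerManager to prepare the user's VM.

    probabilities maps a node id to the predicted probability that the user will
    be served by that node next (a distribution over the current node and its
    neighbors).
    """
    if abs(sum(probabilities.values()) - 1.0) > 1e-6:
        raise ValueError("probabilities must sum to 1")
    # Most likely destinations first; staying on the current node needs no migration.
    ranked = sorted(probabilities.items(), key=lambda kv: kv[1], reverse=True)
    targets = [node for node, p in ranked if node != current_node and p > threshold]
    for node in targets:
        send_request(node, user_id)
    return targets
```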

Implementation

Elijah/Openstack++

Openstack is a project started in 2010 as a joint project of Rackspace Hosting and NASA, and currently managed by the Openstack Foundation. It is a well-known and widely adopted open-source IaaS platform whose many interacting services together deliver the full feature set for managing computation, storage, and networking resources and for the dynamic allocation of VMs. Elijah is an extension of the Openstack infrastructure, with very similar functionalities, specifically targeted at running the intermediate middleware layer and at supporting resource-limited devices. Features can be dynamically added to or removed from the platform in the same way extensions are added to the default version of Openstack, by placing custom files into standard paths, e.g., cloudlet.py, cloudlet_api.py, etc. In addition, since Openstack is a complex tool that contains all the typical functionalities required by cloud environments, Elijah also provides a more lightweight version, called the standalone version. The Elijah standalone version is completely decoupled from OpenStack, which makes features much easier to test; it is particularly suitable for testing purposes and provides tools to perform VM synthesis from an overlay, without the handoff mechanism, independently of the other platforms.

Elijah is a notable MEC-oriented extension of Openstack, with a relevant and growing community of MEC developers working on top of it. Elijah is configured by first installing Openstack and then loading the Elijah extension libraries that specialize it into the MEC platform. In our extended platform, we have modified the standard Elijah nova.conf configuration file with the specification of our CPU models, to enable our resource-aware handoffs between heterogeneous hardware and to allow interoperability among different MEC nodes. In fact, since a deployed VM inherits the characteristics of the host CPU through the hypervisor and the hardware virtualization flags, we use the virsh APIs to interact with the libvirt driver, which manages the KVM/QEMU interactions, to determine whether CPUs are compatible, and we then specify the compatible models in the nova.conf file. The most relevant Elijah features at the base of our work are base image import, base image resume, overlay creation, and VM synthesis:

- BASE IMAGE IMPORT: A VM base image can be imported offline into Glance, so that the base image used to build each VM is loaded in advance. Each base image is a compressed file that contains: the base disk image with the related hash value list; a memory snapshot with the related hash value list; an is_cloudlet flag indicating that it is not a standard cloud image; and the libvirt configuration, with metadata describing the characteristics of the VMs generated from the base image. The Elijah command to import a base image is cloudlet import-base, which decompresses the base image and stores it in the Elijah database, assigning it a unique hash that identifies it unequivocally.

- BASE IMAGE RESUME: Resuming a base image is also an offline operation and usually follows the base image import. During base image resume, a developer prepares a back-end server at the middleware layer; typically this phase includes preparing dependent libraries, downloading and setting up executable binaries, and changing OS and system configurations, analogously to what happens in Openstack when a snapshot is resumed. To resume a base image, the Elijah platform uses a cloudlet hypervisor driver class, called CloudletDriver, which inherits from the original LibvirtDriver and checks whether the metadata associated with the base virtual disk image carry the is_cloudlet flag. In this case, the driver resumes the base VM from the snapshot rather than booting a new VM instance. The first resume of a base image usually takes a long time, in the order of a few minutes depending on the hardware capability of the host, but since it can be executed offline, we perform it in advance, preparing the MEC node before it receives user requests. In this way, Elijah imports the base image into the cache of the compute node, so subsequent base image resumes do not slow down the system and are not significantly perceived by users. At the end of this operation, a VM is ready to execute the service.

- OVERLAY CREATION: This feature creates a minimal VM overlay starting from a resumed or running instance, then compresses the VM overlay and saves it in Glance storage for later download. Since a VM overlay contains the delta between the client VM and the base VM, it can act as a snapshot used later to resume the VM from a specific moment: it contains all the changes that must be applied to the base VM to reproduce the client VM environment at the moment of the migration. This functionality has been added through the extension mechanism, by defining a new virtualization driver CloudletDriver class that inherits from nova rpc.ComputeAPI. The Elijah command to create a customized VM on top of the base VM is cloudlet overlay.

- VM SYNTHESIS AND HANDOFF: VM handoff allows VMs to migrate between different Openstack nodes. Since it involves two independent nodes, the user must have permission to access both of them in order to call their APIs; the access credentials are contained in the message payload together with the destination URL. The handoff is executed through a Python file, called cloudlet_client, which requires the UUID of the VM to migrate and the credentials to access both Openstack deployments. VM handoff can be performed only if the VMs have been synthesized. VM synthesis launches a new VM instance in the Openstack cluster. It uses an HTTP POST message with the overlay_url parameter; this message is handled by the CloudletDriver hypervisor driver, which manages the VM spawning methods to perform VM synthesis using the VM overlay and the VM base image. The synthesis mechanism is invoked with the commands synthesis_server, for the server that starts listening locally, and synthesis_client, specifying the server IP and the overlay URL.
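Because VM handoff moves a VM between nodes, the CPU models configured in nova.conf must be usable on both the source and the destination host. The compatibility check can be approximated by comparing CPU feature flags: a guest CPU definition that exposes only the features common to both hosts can run on either of them. The Python sketch below is a simplified stand-in for what libvirt offers through commands such as virsh cpu-compare and virsh cpu-baseline; it parses /proc/cpuinfo-style text, and how the resulting model is written into nova.conf (e.g., through the cpu_mode and cpu_model options of its [libvirt] section) is not shown. The function names are hypothetical.

```python
from typing import Set


def cpu_flags(cpuinfo_text: str) -> Set[str]:
    """Feature flags from /proc/cpuinfo-style text (first 'flags' line found)."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()


def common_guest_features(*cpuinfo_texts: str) -> Set[str]:
    """Features a guest CPU may expose and still run on every listed host."""
    flag_sets = [cpu_flags(text) for text in cpuinfo_texts]
    return set.intersection(*flag_sets) if flag_sets else set()


def can_host(guest_features: Set[str], target_cpuinfo: str) -> bool:
    """A VM can be handed off to a host only if the host offers every feature it uses."""
    return guest_features <= cpu_flags(target_cpuinfo)
```

In a test with an AMD and an Intel host, the abbreviated flag lists would be illustrative only, not the real flags of the CPUs in our testbed.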

ServerManager

The cloudlet server, on the MEC middleware, works alongside and in support of the Elijah platform. It is an application that interfaces mobile device requests with the Elijah platform, managing resource and communication requests at the edge of the network and acting as a dispatcher of user requests towards the MEC node. The cloudlet server is the component that mobile devices contact to use middleware layer services; once a mobile device decides to communicate with the cloudlet server, a communication channel is created between the device and the MEC node, specifying the services the mobile device needs. Subsequently, the cloudlet server checks the MEC node status, communicates with Elijah, and returns the response to the mobile device. On the Elijah side, the cloudlet server communicates with Elijah in two ways: for standard Openstack operations, through JCloud, a complete, portable, and open-source project that provides a basic abstraction across the Openstack Compute APIs; for Elijah operations, through the cloudlet_client Python command provided by the Elijah team, which also adds error checking on Elijah requests. On the mobile device side, the cloudlet server advertises its features through a discovery server, by sending multicast messages containing the type and the description of the middleware service, so that it can be autonomously discovered by devices. The discovery service uses Avahi, an open-source implementation of Zero Configuration Networking (Zeroconf). Zeroconf is a set of technologies that automatically configure IP networks in the absence of configuration information from either a user or infrastructure services, e.g., DHCP and DNS servers.
Zeroconf implements four main functionalities: i) automatic network address assignment, by introducing a link-local addressing method coupled with the IPv4/IPv6 auto-configuration mechanisms; ii) automatic distribution and resolution of host names, with Multicast DNS (mDNS); iii) automatic location of network services, to find services over the network through DNS-based Service Discovery (DNS-SD); iv) multicast address allocation, using the Zeroconf Multicast Address Allocation Protocol (ZMAAP). Avahi is one of the major Zeroconf implementations, based on Linux, which lets hosts locate services inside the local network and lets a new host see and communicate with the other hosts in the network. The Avahi daemon uses the core libraries to implement a multicast DNS stack; a service and its characteristics are advertised by placing XML files in the /etc/avahi/services folder. Avahi also uses the nss-mdns and avahi-dnsconfd components to access the discovery system and to interact with DNS via the command line. In addition, the libavahi-client library complements the original libavahi-core APIs with access to the multicast DNS service, used for example by avahi-publish, avahi-browse, and avahi-resolve.
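The static service definitions that Avahi reads from /etc/avahi/services follow a small XML schema (service-group, name, service, type, port, txt-record). The sketch below generates such a file with Python's standard library; the service name, the service type _cloudlet._tcp, the port, and the TXT key are hypothetical values for a cloudlet server, not the ones used by our implementation.

```python
import xml.etree.ElementTree as ET
from typing import Dict


def avahi_service_xml(name: str, service_type: str, port: int, txt: Dict[str, str]) -> str:
    """Build a static Avahi service definition for /etc/avahi/services/*.service."""
    group = ET.Element("service-group")
    ET.SubElement(group, "name").text = name
    service = ET.SubElement(group, "service")
    ET.SubElement(service, "type").text = service_type   # DNS-SD type, e.g. _x._tcp
    ET.SubElement(service, "port").text = str(port)
    for key, value in txt.items():
        # TXT records carry the service description as key=value pairs.
        ET.SubElement(service, "txt-record").text = f"{key}={value}"
    header = ('<?xml version="1.0" standalone="no"?>\n'
              '<!DOCTYPE service-group SYSTEM "avahi-service.dtd">\n')
    return header + ET.tostring(group, encoding="unicode") + "\n"
```

The returned text would be saved as a .service file in /etc/avahi/services so that the daemon advertises the cloudlet server over mDNS/DNS-SD.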

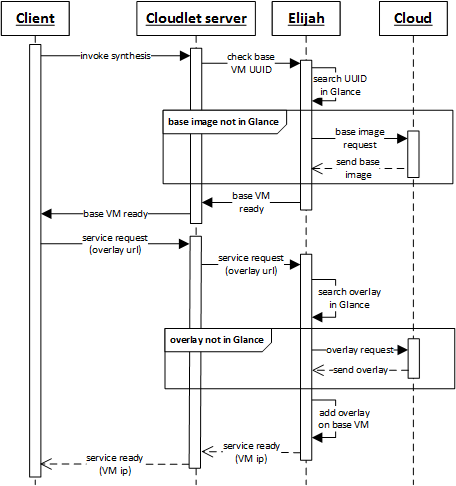

When a mobile device sends requests, the cloudlet server checks how the MEC node can serve them:

- Base image availability request. The cloudlet server checks, through JCloud, whether the base image is present in Glance and tells the client whether the target service is available. Usually the base images are already in Glance, pre-loaded by Elijah. Nevertheless, we include this check to handle anomalies that may force Elijah to contact the cloud to import the base image from it.

- Service availability request. The cloudlet server checks whether previously created VMs are available to serve the service request. If no VM is available, the cloudlet server forwards the request to Elijah, specifying the overlay, and Elijah synthesizes a new VM, starting the provisioning operations. At the end of the synthesis, the cloudlet server returns the IP address and port of the VM to the cloudlet client.
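The two request types can be modeled in a few lines of Python. Under our assumptions, Glance is represented by a set of image names, while fetch_base_from_cloud and synthesize are hypothetical callbacks standing in for the cloud import and for the synthesis request forwarded to Elijah; the actual JCloud and Elijah calls are not reproduced.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Set, Tuple

Endpoint = Tuple[str, int]  # (IP address, port) returned to the cloudlet client


@dataclass
class CloudletServerSketch:
    """Hypothetical model of the two request types handled by the cloudlet server."""
    glance_images: Set[str]                        # base images known to Glance
    fetch_base_from_cloud: Callable[[str], bool]   # anomaly fallback: import from cloud
    synthesize: Callable[[str, str], Endpoint]     # (base image, overlay URL) -> VM
    running_vms: Dict[str, Endpoint] = field(default_factory=dict)

    def base_image_available(self, base_image: str) -> bool:
        if base_image in self.glance_images:
            return True
        # The pre-loaded image is missing: try to import it from the cloud.
        if self.fetch_base_from_cloud(base_image):
            self.glance_images.add(base_image)
            return True
        return False

    def service_endpoint(self, service: str, base_image: str,
                         overlay_url: str) -> Optional[Endpoint]:
        if service in self.running_vms:            # reuse a previously created VM
            return self.running_vms[service]
        if not self.base_image_available(base_image):
            return None
        endpoint = self.synthesize(base_image, overlay_url)  # request to Elijah
        self.running_vms[service] = endpoint
        return endpoint
```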

Finally, we use the Network Time Protocol (NTP), a widely used mechanism for synchronizing the clocks of a set of distributed computers. Every cloudlet server runs an NTP client that synchronizes with the same NTP server, so that different MEC hosts share a consistent notion of time when they communicate with each other.
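For reference, an NTP client estimates its clock offset from the four timestamps of a single request/response exchange, using the standard formulas of RFC 5905. The Python function below is a generic illustration of that computation, not part of our platform.

```python
from typing import Tuple


def ntp_offset_and_delay(t0: float, t1: float, t2: float, t3: float) -> Tuple[float, float]:
    """Clock offset and round-trip delay from one NTP exchange (RFC 5905).

    t0: client transmit time (client clock)
    t1: server receive time (server clock)
    t2: server transmit time (server clock)
    t3: client receive time (client clock)
    """
    # Positive offset: the client clock is behind the server clock.
    offset = ((t1 - t0) + (t2 - t3)) / 2
    # Network round trip, excluding the time spent inside the server.
    delay = (t3 - t0) - (t2 - t1)
    return offset, delay
```

For example, with a client 5 s behind the server, 0.1 s of one-way latency, and 0.05 s of server processing, the timestamps (100.0, 105.1, 105.15, 100.25) yield an offset of 5.0 s and a delay of 0.2 s.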

Migration

Our solution is based on the ability to automatically perform virtualized function migration proactively, as already stated, by pre-loading and pre-configuring the needed VMs in advance on the right MEC node. In this way, when mobile devices actually perform their handoff, the ServerManager checks the available VMs and returns the IP address and port to access them, without any communication with, or load on, Elijah. Our predictive handoff is complemented by a reactive behavior, necessary to cope with the few cases in which mobile devices request the migration and the needed VMs have not been migrated in advance, e.g., in the case of unexpected user movements: in this case, service handoff is performed after requesting the discovery of a nearby MEC node.

As depicted in Figure 1, our proactive migration mechanism stores location data (latitude and longitude) on the global cloud, passing through the ServerManager, to compose temporal data series. The cloud periodically analyzes these historical series in order to predict users' next locations. To this purpose, we apply polynomial non-linear regression using the LibSVM machine learning toolkit, which provides a set of libraries for Support Vector Machines. We chose a regression-oriented technique, instead of discrete statistical models such as a Markov model (widely used in the literature for migration prediction), to obtain a more accurate forecast that depends on historical data and implicitly considers other elements, such as direction/speed of movement, temporal/spatial data locality, and the delta between consecutive sensed locations. Regression analysis requires more resources than discrete models, both for storing large amounts of data and for processing them; however, our MEC infrastructure does not suffer performance degradation, because this processing is performed on the global cloud and offline. In particular, we use the regression results to predict the likelihood that a given user moves under a given MEC node, considering the subset of MEC nodes in the user's proximity. Finally, when the cloud sends a migration request to the ServerManager, the VM migration takes place, with Elijah seamlessly integrated to execute both the VM synthesis and handoff procedures.
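The prediction step can be sketched as follows. Our platform uses LibSVM regression; to stay self-contained, this sketch swaps in an ordinary least-squares polynomial fit (degree 2) on each coordinate, extrapolates the user's position at the next time step, and turns distances from neighboring MEC nodes into a probability distribution with inverse-distance weights. Both the least-squares substitute and the inverse-distance weighting are assumptions made for illustration, and latitude/longitude are treated as planar coordinates.

```python
import math
from typing import Dict, List, Sequence, Tuple


def polyfit(ts: Sequence[float], ys: Sequence[float], degree: int = 2) -> List[float]:
    """Least-squares coefficients c[0] + c[1]*t + ... (needs > degree distinct times)."""
    n = degree + 1
    # Normal equations A c = b.
    a = [[sum(t ** (i + j) for t in ts) for j in range(n)] for i in range(n)]
    b = [sum(y * t ** i for t, y in zip(ts, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, n):
            f = a[row][col] / a[col][col]
            for k in range(col, n):
                a[row][k] -= f * a[col][k]
            b[row] -= f * b[col]
    # Back-substitution.
    coeffs = [0.0] * n
    for row in reversed(range(n)):
        s = sum(a[row][k] * coeffs[k] for k in range(row + 1, n))
        coeffs[row] = (b[row] - s) / a[row][row]
    return coeffs


def predict_position(track: Sequence[Tuple[float, float, float]],
                     t_next: float) -> Tuple[float, float]:
    """Extrapolate (lat, lon) at t_next from a (t, lat, lon) history."""
    t0 = track[0][0]
    ts = [p[0] - t0 for p in track]  # relative times keep the system well conditioned
    coords = []
    for dim in (1, 2):
        c = polyfit(ts, [p[dim] for p in track])
        coords.append(sum(ci * (t_next - t0) ** i for i, ci in enumerate(c)))
    return coords[0], coords[1]


def node_likelihoods(pos: Tuple[float, float],
                     nodes: Dict[str, Tuple[float, float]]) -> Dict[str, float]:
    """Turn the predicted position into a distribution over nearby MEC nodes."""
    weights = {n: 1.0 / (math.dist(pos, xy) + 1e-9) for n, xy in nodes.items()}
    total = sum(weights.values())
    return {n: w / total for n, w in weights.items()}
```

The resulting distribution is what a threshold rule, like the one configured in the cloud layer, would then be applied to.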

VM Synthesis and Handoff

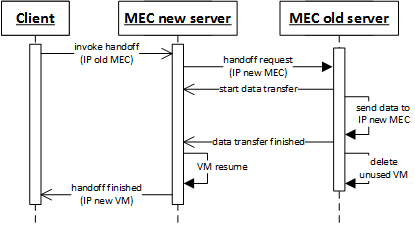

The ServerManager receives the migration request from the users or the cloud and forwards it to the Elijah platform, indicating the base image to instantiate and the overlay to add. In this way, Elijah receives the specification of the service to create, through the base image, and the users' modifications, through the overlay, which allows live migration of stateful virtualized functions at runtime, preserving service continuity by recreating on the destination node the same service used before the migration, including its state. On the one hand, the time needed to synthesize a virtualized function may vary significantly depending on which configuration and files are already available on the MEC node: i) Elijah has the base VM and retrieves the overlay locally at runtime, so it can start executing tasks for the mobile devices right after applying the overlay to the existing base VM; ii) Elijah has the base VM but no local overlay for the service, and must retrieve it from either the clients or the global cloud; iii) Elijah needs both the base VM and the overlay. VM synthesis allows a VM running on one node to freeze its state and resume on any node, at runtime, to execute near the mobile device. Synthesis is performed by delivering an overlay, specified with a URL by the mobile device, whose deltas are applied over the base VM on the MEC node. On the other hand, VM handoff is our higher-level MEC platform feature that allows running VMs to migrate between different MEC nodes at runtime, typically to better support service continuity and to preserve service state despite MEC-related mobility. The handoff procedure is completely transparent to the client, which only needs to invoke the handoff functionality on the new MEC node, specifying the IP address of the current MEC node (to enable retrieval of the service state).
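The three synthesis cases above can be captured by a small planning function that reports which files must be fetched before synthesis can start. The enum names, the function, and the rule that a missing base VM can only come from the cloud repository are hypothetical simplifications.

```python
from enum import Enum
from typing import List, Tuple


class SynthesisCase(Enum):
    LOCAL = 1          # i) base VM and overlay already on the node
    NEEDS_OVERLAY = 2  # ii) overlay must come from the client or the global cloud
    NEEDS_BASE = 3     # iii) base VM (and possibly the overlay) must be fetched


def plan_synthesis(has_base: bool, has_overlay: bool,
                   cloud_reachable: bool) -> Tuple[SynthesisCase, List[str]]:
    """Classify the synthesis case and list the files to fetch before starting."""
    missing = [name for name, present in (("base", has_base), ("overlay", has_overlay))
               if not present]
    if not missing:
        return SynthesisCase.LOCAL, missing
    if missing == ["overlay"]:
        return SynthesisCase.NEEDS_OVERLAY, missing
    # In this sketch, base images are only available from the cloud repository.
    if not cloud_reachable:
        raise RuntimeError("base VM missing and the cloud repository is unreachable")
    return SynthesisCase.NEEDS_BASE, missing
```

The cases are listed in increasing order of expected synthesis time, which is why proactive migration tries to leave each target node in case i) before the device arrives.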

Figure 3 illustrates the sequence diagram of VM synthesis, highlighting the messages exchanged by the various components during service provisioning, and Figure 4 illustrates the sequence diagram of VM handoff.


Virtualized Functions

After the base image resume, we obtain a VM that can be reached by the user; however, before it is ready to serve user requests, the overlay must be loaded. The overlay is mainly composed of OpenCV libraries that are loaded and configured by automatic scripts. We chose to keep the OpenCV environment in the overlay rather than in the base image because the resulting overlay is still limited in size and, in case of multiple heterogeneous services, we can reuse the same base image and apply different overlays depending on the service we want to provide.
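Because each overlay only records what differs from the shared base image, several services can reuse one base image. This principle (keep only the parts that differ from the base VM and re-apply them during synthesis) can be illustrated with chunk-level hashes, echoing the hash value lists that accompany each base image. The Python sketch below is not Elijah's overlay format: the 4 KiB chunk size, SHA-256 hashing, zlib compression, and the dictionary layout of the overlay are hypothetical, and memory state is ignored.

```python
import hashlib
import zlib
from typing import Dict, List

CHUNK = 4096  # hypothetical chunk size in bytes


def chunk_hashes(image: bytes) -> List[str]:
    """SHA-256 digest of each fixed-size chunk of an image."""
    return [hashlib.sha256(image[i:i + CHUNK]).hexdigest()
            for i in range(0, len(image), CHUNK)]


def create_overlay(base: bytes, modified: bytes) -> Dict:
    """Keep (compressed) only the chunks of `modified` that differ from `base`."""
    base_hashes = chunk_hashes(base)
    changed = {}
    for idx, digest in enumerate(chunk_hashes(modified)):
        if idx >= len(base_hashes) or digest != base_hashes[idx]:
            changed[idx] = zlib.compress(modified[idx * CHUNK:(idx + 1) * CHUNK])
    return {"size": len(modified), "chunks": changed}


def synthesize(base: bytes, overlay: Dict) -> bytes:
    """Rebuild the customized image by applying the overlay chunks to the base."""
    size = overlay["size"]
    image = bytearray(base[:size].ljust(size, b"\0"))   # truncate or zero-pad the base
    for idx, data in overlay["chunks"].items():
        chunk = zlib.decompress(data)
        image[idx * CHUNK:idx * CHUNK + len(chunk)] = chunk
    return bytes(image)
```

Two services built on the same base image would each carry only their own changed chunks, which is what keeps per-service overlays small.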

A virtualized function is generally created by applying the needed overlay (mainly consisting of the libraries characterizing the specific mobile service) to the VM created by the base image resume. In particular, in our running case study we extensively use OpenCV, an open-source library that includes a set of optimized algorithms to process media files, to detect and highlight human faces inside an image. OpenCV already contains an implementation of the Haar cascade classifier for face detection, which combines several weak classifiers to produce the decision. Each weak classifier performs a simple check, e.g., a pixel-difference operation, on a region of interest; the weak classifiers are trained together to compose a strong and accurate overall classifier, and they are organized in stages applied in sequence, so that a region is accepted only if it passes all the stages. The resulting classifier is applied to all the regions of interest of the target image to check whether any location is likely to show the searched object, i.e., a face. Finally, the server stores locally a copy of the image with a red box around each detected face, while the number of detected faces is returned to the interested mobile clients.
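In practice, OpenCV exposes its pre-trained detectors through cv2.CascadeClassifier and its detectMultiScale method, and boxes can be drawn with cv2.rectangle. To show what happens inside, the pure-Python toy below implements the core ideas of a Haar cascade: an integral image for constant-time rectangle sums, a two-rectangle pixel-difference feature, and stages of weighted weak classifiers that reject a window as soon as one stage fails. Feature positions, thresholds, and weights are made up; the real detector learns them during training and scans windows at multiple scales.

```python
from typing import List, Sequence, Tuple

Window = Tuple[int, int, int, int]           # x, y, width, height
WeakClassifier = Tuple[Window, int, float]   # feature window, threshold, vote
Stage = Tuple[List[WeakClassifier], float]   # weak classifiers, stage threshold


def integral_image(img: Sequence[Sequence[int]]) -> List[List[int]]:
    """ii[y][x] = sum of img over rows < y and columns < x (zero-padded)."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Sum of the w x h rectangle with top-left corner (x, y), in O(1)."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii: List[List[int]], x: int, y: int, w: int, h: int) -> int:
    """Haar-like edge feature: upper half minus lower half of the window."""
    half = h // 2
    return rect_sum(ii, x, y, w, half) - rect_sum(ii, x, y + half, w, half)


def passes_cascade(ii: List[List[int]], stages: List[Stage]) -> bool:
    """Accept a window only if every stage's weighted vote reaches its threshold."""
    for weak_classifiers, stage_threshold in stages:
        score = 0.0
        for (x, y, w, h), feature_threshold, vote in weak_classifiers:
            if two_rect_feature(ii, x, y, w, h) > feature_threshold:
                score += vote
        if score < stage_threshold:
            return False   # early rejection keeps most windows cheap
    return True
```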

Mobile Services

Figures 5 and 6 illustrate the two Android applications that we developed: the cloudlet client, which manages the communication between the MEC layer and mobile devices, and the OpenCV application, which is used by users who want to perform face recognition analysis.


The cloudlet client has many functionalities to manage the communication between the mobile device and the cloudlet server. The “start discovery” button enables the reception of multicast messages from cloudlet servers, to become aware of the presence of a MEC node. The cloudlet client scans the suitable services exposed at the MEC layer and retrieves the IP address and port to connect to them. The cloudlet client application uses Android Network Service Discovery (NSD), which allows users to identify cloudlet servers in the local network. With the NsdServiceInfo class, provided by Android, we set all the service parameters, e.g., name, type of transport protocol, and port; we then implement the RegistrationListener to detect any events related to the discovery and, if the service found is correct, we retrieve the connection information with the resolveService method. Subsequently, with the “check base VM” and “syn/check service VM” buttons, the cloudlet client asks the cloudlet server about the availability on the MEC node of, respectively, the base image VM and the target service, and obtains the parameters to access the remote VM and interact with the OpenCV server. Once the service is ready on the MEC node, the cloudlet client enables the “connect to OpenCV service” button, which is usually disabled unless a VM has already been used and is still ready to serve requests. Clicking this button shows a list of Android applications that can be executed on the created VM, from which the user selects the application to use. In the hostile scenario considered, the OpenCV service can be interrupted unexpectedly at any time by device mobility, since the device can lose its connection with the MEC node. In this case, the cloudlet client, which stores the history of all the MEC servers previously used by the mobile device, offers a “handoff to me” functionality that allows the user to select the old MEC node containing the VM to migrate and to invoke the handoff towards the new MEC node.
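The history kept by the cloudlet client and the “handoff to me” action can be sketched as follows. In the application the user picks the old MEC node; this hypothetical Python version accepts that choice as source_ip and otherwise defaults to the most recently used node other than the new one, while request_handoff stands in for the message sent to the new cloudlet server.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class MecHistory:
    """Hypothetical client-side record of the MEC nodes that served this device."""
    visited: List[str] = field(default_factory=list)   # node IPs, oldest first

    def record(self, node_ip: str) -> None:
        if node_ip in self.visited:
            self.visited.remove(node_ip)   # keep each node once, most recent last
        self.visited.append(node_ip)

    def previous_node(self, current_ip: str) -> Optional[str]:
        """Most recently used node other than the current one."""
        for ip in reversed(self.visited):
            if ip != current_ip:
                return ip
        return None


def handoff_to_me(history: MecHistory, new_node_ip: str,
                  request_handoff: Callable[[str, str], None],
                  source_ip: Optional[str] = None) -> Optional[str]:
    """Ask the new MEC node to pull the VM from an old node (user choice or latest)."""
    source = source_ip or history.previous_node(new_node_ip)
    if source is not None:
        request_handoff(new_node_ip, source)
    history.record(new_node_ip)
    return source
```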
Finally, the OpenCV application we implemented selects a picture and sends it to the target VM using the IP address and port previously obtained. After the application has established the connection once, it no longer requires the cloudlet client, because it already knows the parameters to access the MEC node and connects directly to the VM. The VM receives the picture, detects the faces inside it with the OpenCV framework, returns their number, and waits for the next request.
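The wire format between the OpenCV application and the VM is not specified here. As an assumption, the Python sketch below uses a 4-byte big-endian length prefix for the picture and the same format for the face count returned by the VM; detect_faces is a placeholder for the OpenCV processing, and all names are hypothetical.

```python
import socket
import struct
from typing import Callable

HEADER = struct.Struct("!I")  # hypothetical framing: 4-byte big-endian unsigned integer


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, since recv may return fewer than requested."""
    data = bytearray()
    while len(data) < n:
        chunk = sock.recv(n - len(data))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        data.extend(chunk)
    return bytes(data)


def send_picture(sock: socket.socket, picture: bytes) -> int:
    """Client side: send one picture and wait for the number of detected faces."""
    sock.sendall(HEADER.pack(len(picture)) + picture)
    (faces,) = HEADER.unpack(recv_exact(sock, HEADER.size))
    return faces


def serve_one(sock: socket.socket, detect_faces: Callable[[bytes], int]) -> None:
    """VM side: read one picture, run the detector, reply with the face count."""
    (length,) = HEADER.unpack(recv_exact(sock, HEADER.size))
    picture = recv_exact(sock, length)
    sock.sendall(HEADER.pack(detect_faces(picture)))
```

On the device, the socket would come from socket.create_connection((ip, port)) with the IP address and port obtained through the cloudlet client; after that first exchange the application keeps talking to the VM directly.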

Experimental Results

The operational environment used to validate and assess the performance of both our MEC platform and the running case study consists of one Android smartphone, one server in the cloud, and two MEC nodes with heterogeneous CPUs, i.e., AMD Phenom II X4 965 and Intel Core i7 2640M, each with 8 GB RAM and a 100 GB HDD. Each VM managed by our MEC platform in this operational environment is equipped with 1 vCPU, 1 GB RAM, and an 8 GB HDD. The most interesting results concern the many tests we performed on synthesis and handoff. We consider an overlay retrieved locally from Glance, as happens during normal system execution, with a size of 106 MB for newly created services, including approx. 26 MB of user data. During handoff, our MEC platform automatically splits this overlay into several chunks of limited size, to facilitate and parallelize their transmission. Thanks to the vicinity of adjacent MEC nodes, highly distributed in the environment, and to the generally powerful and stable connectivity among MEC nodes, as underlined in many works, overlay transmission over the network proved in our experiments to have negligible overhead compared with data compression/decompression; thus, below we focus mainly on computing capacity and overall node workload.
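Splitting the overlay into chunks lets each piece be compressed, sent, and decompressed independently. In the Python sketch below, the 4 MiB chunk size, zlib compression, and the thread pool are assumptions for illustration, not the parameters used by our platform.

```python
import zlib
from concurrent.futures import ThreadPoolExecutor
from typing import List

CHUNK_SIZE = 4 * 1024 * 1024  # hypothetical chunk size (4 MiB)


def split_overlay(overlay: bytes, chunk_size: int = CHUNK_SIZE) -> List[bytes]:
    """Cut the overlay into consecutive chunks of at most chunk_size bytes."""
    return [overlay[i:i + chunk_size] for i in range(0, len(overlay), chunk_size)]


def compress_chunks(chunks: List[bytes], workers: int = 4) -> List[bytes]:
    """Compress chunks concurrently; pool.map keeps the original chunk order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(zlib.compress, chunks))


def reassemble(compressed: List[bytes]) -> bytes:
    """Receiver side: decompress each chunk and concatenate them in order."""
    return b"".join(zlib.decompress(c) for c in compressed)
```

Keeping chunk order explicit is what allows the receiver to rebuild the overlay even when chunks are transferred over parallel connections.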

The network measurements were performed with iperf3, an open-source, multi-platform tool. Resource usage was monitored with both the Linux System Monitor and htop, an interactive, cross-platform system monitor and process viewer.
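
As a reference, a bandwidth figure can be obtained by running `iperf3 -s` on one host and `iperf3 -c <server> -J` on the other, where `-J` makes iperf3 emit JSON. The Python helper below sketches how to extract the received throughput in Mb/s from that JSON. It assumes a TCP test, for which iperf3 places the summary under `end.sum_received.bits_per_second`; UDP tests report a different `end.sum` object. Whether the measurements in this work used JSON output is not stated, and the helper names are hypothetical.

```python
import json
import subprocess


def received_mbps(iperf3_json: str) -> float:
    """Receiver-side TCP throughput in Mb/s (10**6 bit/s) from `iperf3 -J` output."""
    report = json.loads(iperf3_json)
    return report["end"]["sum_received"]["bits_per_second"] / 1e6


def measure(server: str) -> float:
    """Run a default TCP test against an `iperf3 -s` instance on `server`."""
    result = subprocess.run(["iperf3", "-c", server, "-J"],
                            capture_output=True, text=True, check=True)
    return received_mbps(result.stdout)
```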

Synthesis Results

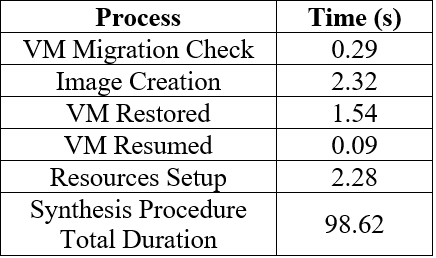

Table 1 reports the average times of the main steps that compose the synthesis procedure, measured over hundreds of runs. The standard deviation of the results is within 2%.
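
One natural reading of the “within 2%” bound is a relative standard deviation, i.e., the standard deviation divided by the mean. This is our interpretation; the source does not state it. The short Python sketch below shows how per-step averages and that bound could be computed from repeated runs. The function names are hypothetical, and no measured data is embedded.

```python
from __future__ import annotations

import statistics


def summarize(runs: dict[str, list[float]]) -> dict[str, tuple[float, float]]:
    """Map each step name to (mean seconds, relative standard deviation in %)."""
    summary = {}
    for step, samples in runs.items():
        mean = statistics.mean(samples)
        rel_std = 100 * statistics.stdev(samples) / mean  # needs >= 2 samples
        summary[step] = (mean, rel_std)
    return summary


def within_bound(summary: dict[str, tuple[float, float]], bound_pct: float = 2.0) -> bool:
    """True if every step's relative standard deviation is at most bound_pct."""
    return all(rel <= bound_pct for _, rel in summary.values())
```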

We take the instant at which the cloudlet server receives the client's request as the beginning of the measurement. The cloudlet server checks whether some existing VM can already serve the mobile device's request, and whether the MEC node has enough resources to create the VM the user needs. The cloudlet server then forwards the request to Elijah, which creates in Glance the image needed to create the VM. The “VM restored” and “VM resumed” steps are normal VM management operations. They restart the VM with the same state it had before the synthesis was invoked. After the VM is ready, Elijah loads all the needed resources and configuration, such as disk, memory, and network, and enables the listeners for the clients' requests. Note that the total time of the synthesis procedure does not affect system unavailability, because synthesis is performed before the service is migrated. In addition, the tests show that the solution can perform synthesis between two hosts with heterogeneous hardware. It can use interchangeably the overlay created by Elijah or the one created by the standalone version.
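
The admission logic at the start of synthesis can be sketched as follows: reuse an existing VM if one can serve the request, otherwise synthesize a new one only if the node has enough resources. This is a hypothetical Python model. The `NodeResources` type and the return values are illustrative, and the per-VM profile is taken from the test setup (1 vCPU, 1 GB RAM, 8 GB disk). The real platform delegates synthesis to Elijah and Glance.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class NodeResources:
    free_vcpus: int
    free_ram_gb: float
    free_disk_gb: float
    running_services: set[str] = field(default_factory=set)


# Per-VM profile used in the experiments: 1 vCPU, 1 GB RAM, 8 GB disk.
VM_VCPUS, VM_RAM_GB, VM_DISK_GB = 1, 1.0, 8.0


def handle_request(node: NodeResources, service: str) -> str:
    """Decide how the cloudlet server answers a client request (sketch)."""
    if service in node.running_services:
        return "reuse"  # an existing VM can already serve the request
    if (node.free_vcpus >= VM_VCPUS and node.free_ram_gb >= VM_RAM_GB
            and node.free_disk_gb >= VM_DISK_GB):
        node.free_vcpus -= VM_VCPUS
        node.free_ram_gb -= VM_RAM_GB
        node.free_disk_gb -= VM_DISK_GB
        node.running_services.add(service)
        return "synthesize"  # the real server forwards the request to Elijah
    return "reject"  # not enough resources on this MEC node
```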

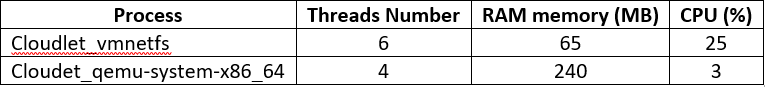

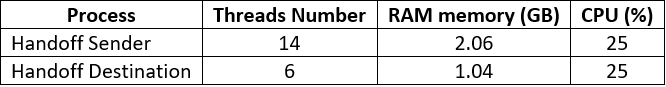

Regarding resource usage, Table 2 summarizes the number of threads, the RAM, and the CPU percentage used by the two main processes executed during the synthesis procedure.
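
On Linux, the thread count and resident memory shown by monitors such as htop can also be read programmatically from `/proc/<pid>/status`. Its `Threads:` field gives the number of threads, and its `VmRSS:` field gives the resident set size in kB. The minimal Python sketch below parses that file's content. It is an alternative to the monitors named above, not the procedure used in the experiments. CPU percentage, which requires sampling `/proc/<pid>/stat` over time, is left out.

```python
from __future__ import annotations

from pathlib import Path


def parse_status(text: str) -> dict[str, int]:
    """Extract thread count and resident memory (kB) from /proc/<pid>/status text."""
    result = {}
    for line in text.splitlines():
        key, _, value = line.partition(":")
        if key == "Threads":
            result["threads"] = int(value.strip())
        elif key == "VmRSS":
            result["rss_kb"] = int(value.split()[0])  # value looks like "  123456 kB"
    return result


def process_stats(pid: int) -> dict[str, int]:
    """Read and parse the status file of a running process (Linux only)."""
    return parse_status(Path(f"/proc/{pid}/status").read_text())
```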

The two processes have distinct roles. cloudlet_vmnetfs enables on-demand fetches of VM disk/memory. cloudlet_qemu-system-x86_64 starts the VM before the entire memory snapshot is available.
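
The idea behind on-demand fetching resembles demand paging. The VM starts before the whole image has arrived, and each block is retrieved only when it is first touched. The Python sketch below illustrates only that idea, using a hypothetical `LazyImage` class and a caller-supplied fetch function. It is not how cloudlet_vmnetfs is actually implemented.

```python
from __future__ import annotations

from typing import Callable


class LazyImage:
    """Hypothetical demand-fetched image: blocks are retrieved on first access."""

    def __init__(self, num_blocks: int, block_size: int,
                 fetch: Callable[[int], bytes]) -> None:
        self.block_size = block_size
        self.fetch = fetch
        self.blocks: list[bytes | None] = [None] * num_blocks
        self.fetches = 0  # how many blocks actually had to be retrieved

    def read(self, offset: int, length: int) -> bytes:
        out = bytearray()
        first = offset // self.block_size
        last = (offset + length - 1) // self.block_size
        for index in range(first, last + 1):
            block = self.blocks[index]
            if block is None:  # miss: fetch now and cache for later reads
                block = self.fetch(index)
                self.blocks[index] = block
                self.fetches += 1
            out.extend(block)
        start = offset - first * self.block_size
        return bytes(out[start:start + length])
```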

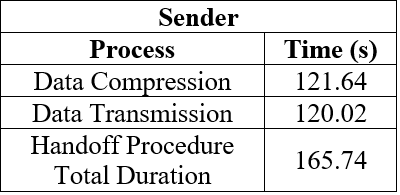

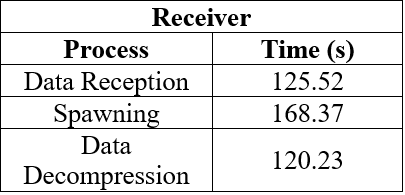

Handoff Results

The handoff tests mainly aim to demonstrate that our solution minimizes two quantities: the migration time of the user's data, and the unavailability time of the MEC middleware during handoff. During handoff, the overlay to migrate is divided into several chunks of limited size, which facilitates network transmission by allowing parallel transfers. Since the MEC nodes are in the same local network, the network bandwidth is very high and data transmission can be considered negligible. The handoff time is therefore mainly due to data compression and decompression on the two nodes, and strictly depends on the computational capacity and overall workload of those nodes. In addition, the MEC nodes synchronize with each other to exchange data, so the migration proceeds at the speed of the slower node. Tables 3 and 4 summarize the average times of the main steps that compose the handoff procedure on the sender and receiver nodes, measured over hundreds of runs. The reported measurements were obtained by migrating VMs that had processed the same number of client requests before the handoff, and therefore had the same size. The averages were computed by swapping the sender and receiver hosts, i.e., using each node both as sender and as receiver, in order to simulate the randomness of client usage. The bandwidth of the local network used is 93.8 Mb/s. The standard deviation is again within 2%.
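
The two nodes work in lockstep on a stream of chunks, so the end-to-end transfer behaves like a pipeline whose throughput is set by its slowest stage. The Python sketch below captures that reasoning under simplifying assumptions. All stage rates are expressed relative to the same data volume, and pipeline fill and drain time is ignored. The function name and stage names are hypothetical. Only the 93.8 Mb/s link rate comes from the measurements.

```python
from __future__ import annotations


def pipeline_time(size_mb: float, stage_rates_mbps: dict[str, float]) -> tuple[str, float]:
    """Return (bottleneck stage, seconds) to push size_mb through the pipeline.

    size_mb is in megabytes (1 MB = 8 Mb); rates are in megabits per second.
    Fill and drain time is ignored, which is reasonable for many small chunks.
    """
    stage, rate = min(stage_rates_mbps.items(), key=lambda item: item[1])
    return stage, size_mb * 8 / rate
```

As an example, take hypothetical compression and decompression rates of 10 and 40 Mb/s. Then `pipeline_time(132.61, {"compress": 10.0, "network": 93.8, "decompress": 40.0})` names `"compress"` as the bottleneck, even though the network stage runs at 93.8 Mb/s.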

The sender starts the handoff when it receives the migration request from the destination node, and finishes when all the data have been sent to the receiver. The receiver, instead, starts the handoff when it receives the request from the mobile device, and terminates when the VM with the service is resumed. As the experimental results show, the most time-consuming processes are data compression and transmission over the network. Note that the processes shown in Tables 3 and 4 are performed simultaneously, in particular the compression-sending and receiving-decompression phases.
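
The overlap between compression-sending on the sender and receiving-decompression on the receiver can be illustrated with a producer/consumer pipeline. This single-process Python sketch is an analogy, not the platform's code. A bounded `queue.Queue` stands in for the network link. When the queue is full, the compressing thread blocks; when it is empty, the decompressing thread waits. The pair therefore advances at the pace of the slower side. zlib is an arbitrary codec choice here, since the source does not name the compression algorithm. All function names are hypothetical.

```python
from __future__ import annotations

import queue
import threading
import zlib

_END = None  # sentinel marking the end of the chunk stream


def sender(chunks: list[bytes], link: queue.Queue) -> None:
    """Compress each chunk and 'send' it as soon as it is ready."""
    for index, chunk in enumerate(chunks):
        link.put((index, zlib.compress(chunk)))  # blocks while the link is full
    link.put(_END)


def receiver(link: queue.Queue, out: dict[int, bytes]) -> None:
    """Decompress chunks as they arrive, overlapping with the sender's work."""
    while (item := link.get()) is not _END:
        index, payload = item
        out[index] = zlib.decompress(payload)


def migrate(chunks: list[bytes], link_capacity: int = 4) -> bytes:
    """Run sender and receiver concurrently and return the reassembled data."""
    link: queue.Queue = queue.Queue(maxsize=link_capacity)
    received: dict[int, bytes] = {}
    threads = [threading.Thread(target=sender, args=(chunks, link)),
               threading.Thread(target=receiver, args=(link, received))]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return b"".join(received[i] for i in range(len(chunks)))
```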

Summarizing some experimental considerations on the overall handoff procedure:

- 85 chunks transferred;

- 132.61 MB of data transferred between the two hosts for the considered overlay, which is larger than the 106 MB overlay of a newly created service because it also contains data related to the executions;

- 172.52 s is the total time of the whole handoff procedure, from when it starts on the sender to when the service is ready on the destination host (standard deviation < 2%);

- 1.60 s is the total VM downtime, during which the MEC service is unavailable and the client experiences a service interruption.
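
These figures allow a quick consistency check of the claim that the network is not the bottleneck. The computation below assumes decimal units (1 MB = 8 Mb) and the measured local bandwidth of 93.8 Mb/s. It gives the raw transfer time of the migrated data, the average chunk size, and the share of the whole handoff taken by downtime:

```latex
t_{\text{net}} = \frac{132.61\ \text{MB} \times 8\ \text{Mb/MB}}{93.8\ \text{Mb/s}} \approx 11.3\ \text{s} \ll 172.52\ \text{s}

\bar{s}_{\text{chunk}} = \frac{132.61\ \text{MB}}{85} \approx 1.56\ \text{MB},
\qquad
\frac{t_{\text{down}}}{t_{\text{handoff}}} = \frac{1.60\ \text{s}}{172.52\ \text{s}} \approx 0.93\%
```

The link alone would account for well under a tenth of the total handoff time, which is consistent with compression and decompression dominating. The interruption visible to the client is below 1% of the procedure.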

Table 5 shows the resource usage of both the sender and destination hosts: number of threads, RAM, and CPU percentage.

Cloudlet vs Cloud Latency Comparison

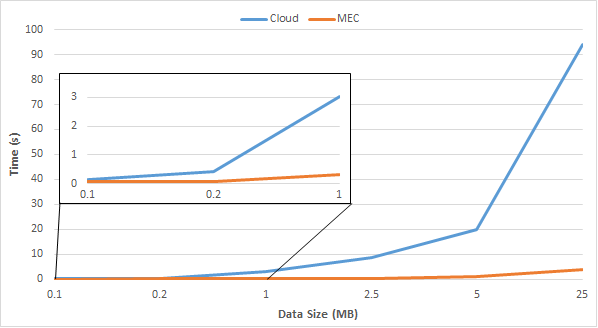

The main purpose of our solution is to minimize the latency between mobile devices and the remote execution server. This greatly reduces response time compared with the cloud, which is usually unsuitable for mobile devices, in particular for applications with strict requirements or in hostile environments. We test and evaluate the latency reduction our solution achieves when execution runs on the MEC middleware at the edge of the network instead of in the cloud. We measure and compare system performance in processing an image and replying to mobile device requests, with the OpenCV platform located either on a MEC node or in the cloud. The MEC layer and the mobile devices share the same local network at one-hop distance, so the main difference between the MEC layer and the cloud is network throughput. The most significant test is therefore the file exchange performed when the mobile application sends an image to be processed remotely. We measure the time the server needs to retrieve the image as a function of image size. Figure 7 shows the latency comparison between the MEC layer and the cloud, with an available network bandwidth of 53.3 Mb/s and 2.44 Mb/s, respectively.
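
Since file transfer dominates the comparison, a first-order estimate of retrieval time is simply image size divided by available bandwidth. The Python sketch below applies that idealized model to the two measured bandwidths. The model ignores protocol overhead, round-trip latency, and congestion, and it uses decimal units; all of these are assumptions. It is a back-of-the-envelope check, not a reproduction of Figure 7.

```python
MEC_MBPS = 53.3    # measured MEC-layer bandwidth (Mb/s)
CLOUD_MBPS = 2.44  # measured cloud bandwidth (Mb/s)


def transfer_seconds(size_mb: float, bandwidth_mbps: float) -> float:
    """Idealized transfer time: size in MB (1 MB = 8 Mb), bandwidth in Mb/s."""
    return size_mb * 8 / bandwidth_mbps


for size in (0.5, 1, 5, 10, 30):
    mec = transfer_seconds(size, MEC_MBPS)
    cloud = transfer_seconds(size, CLOUD_MBPS)
    print(f"{size:>5} MB  MEC {mec:6.2f} s  cloud {cloud:7.2f} s")
```

Under this model, a 1 MB image takes about 0.15 s on the MEC layer and about 3.3 s on the cloud. A 10 MB image takes about 1.5 s versus about 33 s, in line with the qualitative trend reported for Figure 7.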

As expected, the MEC middleware is more responsive than the cloud. This result follows from the three-layer architecture, which minimizes response time. For images smaller than 1 MB, the difference between MEC and cloud can be considered negligible, at most a few seconds. Above 1 MB, latency increases drastically and can cause substantial slowdowns, and at tens of MB the system becomes largely unusable. A two-layer device-cloud architecture is therefore not viable even for normal operations that require sending images of a few MB, because it introduces very high network delays.

Our experimental evaluation shows the effectiveness of our proposal with respect to the MEC technical challenges. It also highlights the deep gap between MEC and cloud in meeting mobile application requirements. The live migration procedure introduces non-negligible latency, but mobility prediction makes it possible to anticipate migration requests so that users do not have to wait. In this way, we enable a wide range of mobile applications to offload services to the MEC layer, even in hostile environments. This encourages developers to structure their applications to send a wider set of tasks for remote execution, and to do so more frequently. In particular, we highlight three classes of applications:
- high-mobility applications, which no longer suffer from the latency of frequent migrations;
- low-latency applications, which benefit from both nearby MEC interactions and predictive migration;
- file-exchanging applications, which no longer have to upload/download files to/from the cloud, thus avoiding relevant slowdowns or system unusability even with limited-size documents.
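
The no-waiting argument rests on starting the migration early enough. The Python sketch below shows a hypothetical trigger rule. It assumes the mobility predictor yields an estimated time until the user reaches the next MEC node. The handoff starts once that estimate drops to the expected handoff duration plus a safety margin. The 172.52 s default is the measured average handoff time, while the margin and both function names are arbitrary placeholders.

```python
HANDOFF_S = 172.52  # measured average handoff duration (s)
MARGIN_S = 30.0     # hypothetical safety margin (s)


def should_start_handoff(predicted_arrival_s: float,
                         handoff_s: float = HANDOFF_S,
                         margin_s: float = MARGIN_S) -> bool:
    """True once waiting longer would risk the user arriving before the VM."""
    return predicted_arrival_s <= handoff_s + margin_s


def wait_on_arrival(lead_time_s: float, handoff_s: float = HANDOFF_S) -> float:
    """Seconds the user still waits if the handoff began lead_time_s before arrival."""
    return max(0.0, handoff_s - lead_time_s)
```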

Contacts

Alessandro Zanni Ph.D. student in Computer Science

Michele Solimando Research Assistant

The activities of our Mobile Middleware Research Group focus on the study and development of support solutions for a broad range of scenarios in the context of mobile networking and distributed services. They span a wide spectrum of subjects concerning novel solutions that support the development of distributed services. These subjects can be classified into the following categories: Cloud Computing, Mobile Computing, Context-based Systems, NGN & Future Internet Scenarios, and Sustainable Infrastructures for Smart Environments.

For any suggestions, comments, or further details, do not hesitate to contact me.

alessandro.zanni3 AT unibo.it

Department of Computer Science Engineering (DISI), University of Bologna

Via del Risorgimento 2, Bologna, Italy